While ChatGPT and other generative text platforms seem to be the focal points for those of us experimenting with AI, we have a lot of great options for using other AI tools to help with language development.

AI image generators have a ton of potential in the ESL classroom, and practicing descriptive language may prove to be loads of fun for students, too.

Midjourney is one of the image generators that has captured a lot of attention across the internet because of its gorgeous output, but there are other options, including Edu-darling Canva.

Descriptive Language Activities

One of the first fun ways people play with text-to-image is simply to see what these bots come up with when you type in a prompt. Most people try to get them to create something weird, like a hotdog punching an alien. Then they try to create photorealistic superheroes. After that, the novelty wears off for a lot of people, and they walk away.

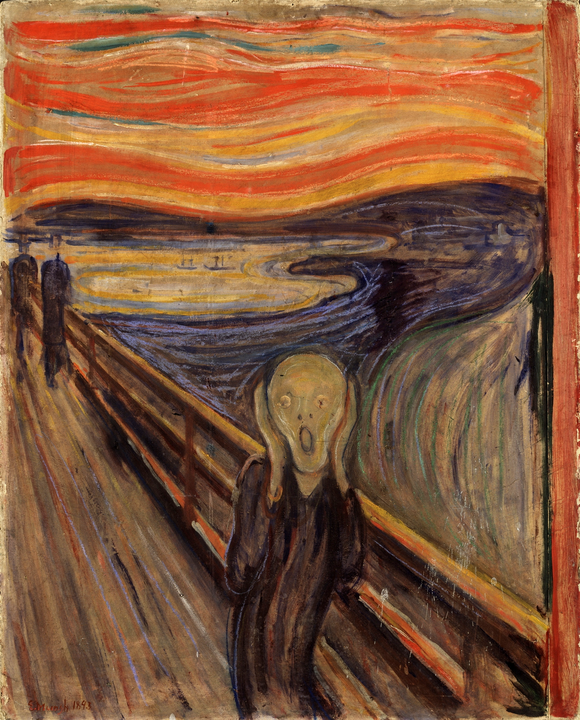

But in the language class, this can be just the beginning. One way to start using these tools with your class is to practice using descriptive language to describe a famous image, then to see how close the AI comes to replicating the image. As an example, let’s use Munch’s The Scream:

Of course, your students can choose anything they like. The more freedom of choice they have, the more likely they are to keep working with their English.

Once students have the image they’d like to duplicate in mind, it’s time to start figuring out how to describe it. This is where the fun comes in. Ask students to use descriptive language to explain the picture in as much detail as possible. They may have to get creative, or raise their hand to ask you for some vocabulary. Here’s what I came up with:

A man, standing on a pier with his hands on his cheeks looking shocked. The man is bald, and wearing black clothes. The art should be in oil paint style with long connected strokes. The background should be in orange tones.

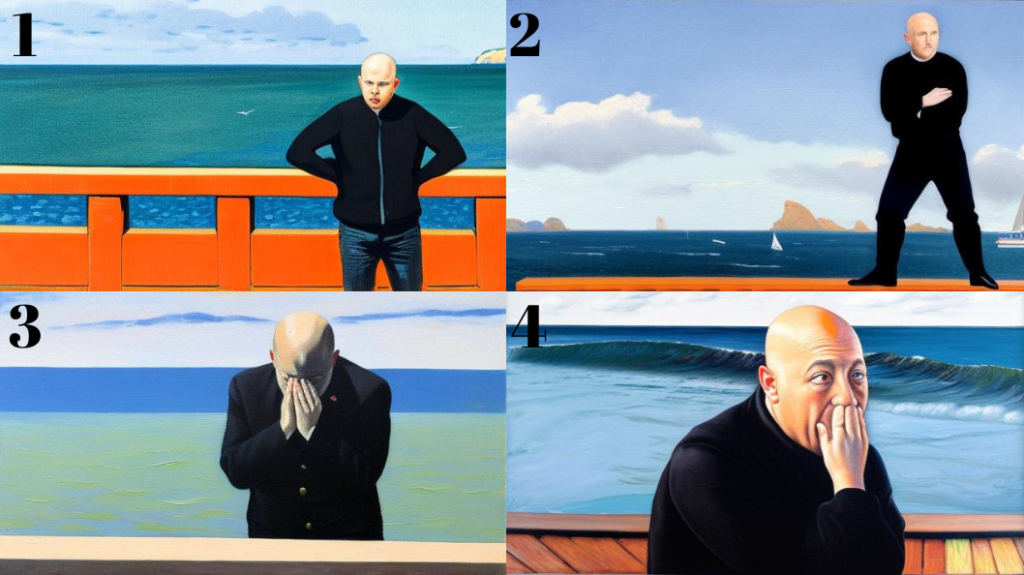

Here are the choices Midjourney came up with:

I don’t know about you, but I was pretty impressed. No, it doesn’t look exactly like Munch’s masterpiece, but for a four-sentence description, I could certainly argue that it’s in the ballpark.

But trying to get the bot to match the picture is only part of the activity. At this point, you can put students into pairs and have them discuss what’s missing. Have them ask each other what language they might have used to get the result closer to the original. This creates a language-rich production environment, and you can also have students display their prompts and the resulting images, then vote on which ended up closest to the original.

Paywalls

The biggest issue with Midjourney is that it’s built on a freemium model, and sooner or later they’re going to come for your money. While I find Midjourney to be the strongest image generation bot at the moment, it’s worth exploring choices that won’t break the bank.

Enter Canva.

Canva also has an experimental feature called “Text-to-Image.” Admittedly, the output is not as good, but you can use it as many times as you like. If you already have a campus Canva account, it’s a no-brainer.

Below, I tried the same activity, updating the prompt after each attempt to get closer and closer to the original:

Image #1 used the same prompt as above. For each of the others, I adjusted the prompt and chose the best image from the new generation. Here are my updated prompts for #2, #3, and #4:

#2) A bald man in black, standing on a pier with his hands on his cheeks looking shocked. The pier should start from the front right foreground to the back left background. The art should be in oil paint style with long connected strokes. The background should be in orange tones.

#3) An abstract oil painting of a bald man in black, standing on a pier with his hands on his cheeks looking shocked. The pier should start from the front right foreground to the back left background. The background should be in orange tones.

#4) An abstract swirly orange oil painting of a bald man in black, standing on a pier with his hands on his cheeks looking shocked. The pier should have a vanishing point in the back left of the painting.

Again, these images are further away from the original, but getting a perfect match isn’t the goal. The goal is for students to use language to generate an image, analyze the output compared to their prompt, then make adjustments and try again.

Each of these images lets students play with language, but since there is a limit to the text students can enter, there’s a lower sense of personal responsibility to “get it perfect,” and instead they can focus on what else might get closer to matching the original.

Once you get students going, you will likely find your own variations and ways to use AI-generated images in your class. You can ask students to generate a holiday image, and challenge them to spot which stereotypical details get included. You can also ask them to describe what their room looks like, then compare the generated image with a picture they have on their phone.

Make sure to encourage students based on what they’re putting in, not what’s coming out. From the examples above, you can see that I never got the positioning of the pier right, and most don’t even have the classic “hands on cheeks” despite it being included in each prompt. You can use this as an opportunity to deflect “failures” away from the students’ production and onto the way that AI interprets commands, lowering the affective filter and increasing the fun.

Give it a try, and let us know in the comments what your students came up with!
