I’ve been working a lot recently on prompts that can help students build their language skills with chatbots like ChatGPT, Bing, Bard, etc. I recently shared what I consider a “Medium-level” prompt on LinkedIn, asking users to try it out and tell me what they got. If you’d like to try it for yourself and follow along with the article, please do:

I want to practice my understanding of the Past Tense. You are going to write a story about Shohei Ohtani and the time he saved the world from an evil mastermind, Dr. Screwball, but I want you to quiz me on the verbs as you tell the story.

Each section of the story should be about 150-200 words. From the reading in each section, select 3 verbs that should be in the past tense, but instead provide me with the dictionary form in bold and ask me to replace it with the correct past tense. All other verbs should be in the appropriate tenses.

After I answer, determine whether Shohei succeeds or fails in that part of the adventure based on the number of correct or incorrect answers I got.

-Specifically, if I got 2 or 3 correct, Shohei succeed.

-If I got 2 or 3 incorrect, Shohei fails. If I get an answer wrong, please pause the assessment and provide a brief (1-2 sentence) explanation of what my mistake was.

-Important: Do not give me the right answer, but instead please challenge me to try to convert the tense again.

-Important: Regardless of whether or not I make the correction correctly, the story will only count my first attempt to determine whether Shohei succeeds or fails.

The story should come to an end naturally after I’ve tried to answer between 18 to 24 questions.

After the story ends, please give me a summary of my strengths and weaknesses with a final score of how many questions I got right and how many I got wrong. Be sure to include a list of words I should review in the future.

Prompt developed by Brent Warner
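If it helps to see the rules laid out another way, here is a rough sketch of the scoring logic the prompt describes, written in Python. This is only an illustration: the function names are hypothetical, and nothing like this actually runs inside the chatbot, which just follows the written instructions. It assumes exactly 3 quizzed verbs per section and counts only the first attempt at each verb, as the prompt asks.

```python
def section_outcome(first_attempts):
    """Decide how a story section ends.

    first_attempts: list of three booleans, True when the learner's
    FIRST try at that verb was correct. Retries are ignored here,
    because the prompt says only the first attempt counts.
    """
    if len(first_attempts) != 3:
        raise ValueError("each section quizzes exactly 3 verbs")
    correct = sum(first_attempts)
    # 2 or 3 correct: Shohei succeeds. 2 or 3 incorrect: Shohei fails.
    return "succeeds" if correct >= 2 else "fails"


def section_range(min_questions=18, max_questions=24, verbs_per_section=3):
    """Convert the prompt's question range into a number of story sections."""
    return (min_questions // verbs_per_section,
            max_questions // verbs_per_section)


print(section_outcome([True, True, False]))   # two right on the first try
print(section_outcome([False, True, False]))  # two wrong on the first try
print(section_range())                        # sections implied by 18 to 24 questions
```

Laid out like this, you can see that a story of 18 to 24 questions works out to 6 to 8 sections of 150-200 words each, which is a lot for a chatbot to keep track of in a single conversation.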

I spent a lot of time testing this out and making additions and adjustments, so I felt fairly confident when I sent it out that people would generally get good results.

Many did. But not everybody.

Prompts can be tricky from account to account, not to mention the differences in output between different Large Language Models. I tend to think of it like the Bible; there are lots of translations and even more interpretations of what those translations mean. It’s also pretty likely that none of them are exactly what the big prompt-writer in the sky originally meant. I’ll step away from the religious analogy before I get myself in trouble, but you get the idea.

Here are a couple of comments from people who reached out to me after trying it to let me know about the issues they had:

From Ana:

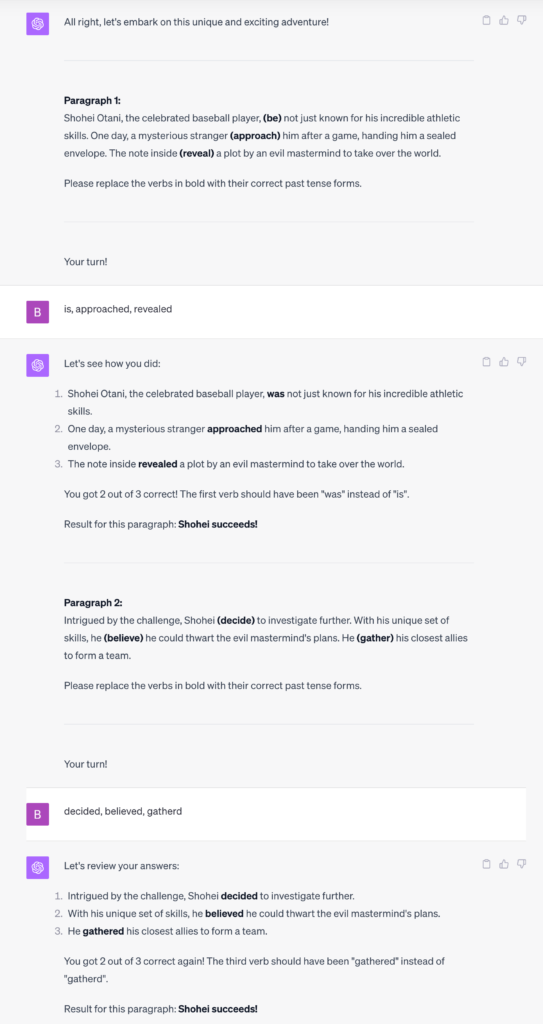

I’ve given this prompt to ChatGPT, and what I got is in the screenshot below. I think we have to teach ChatGPT how to tell the difference between the present and the past simple first. 🤷‍♀️

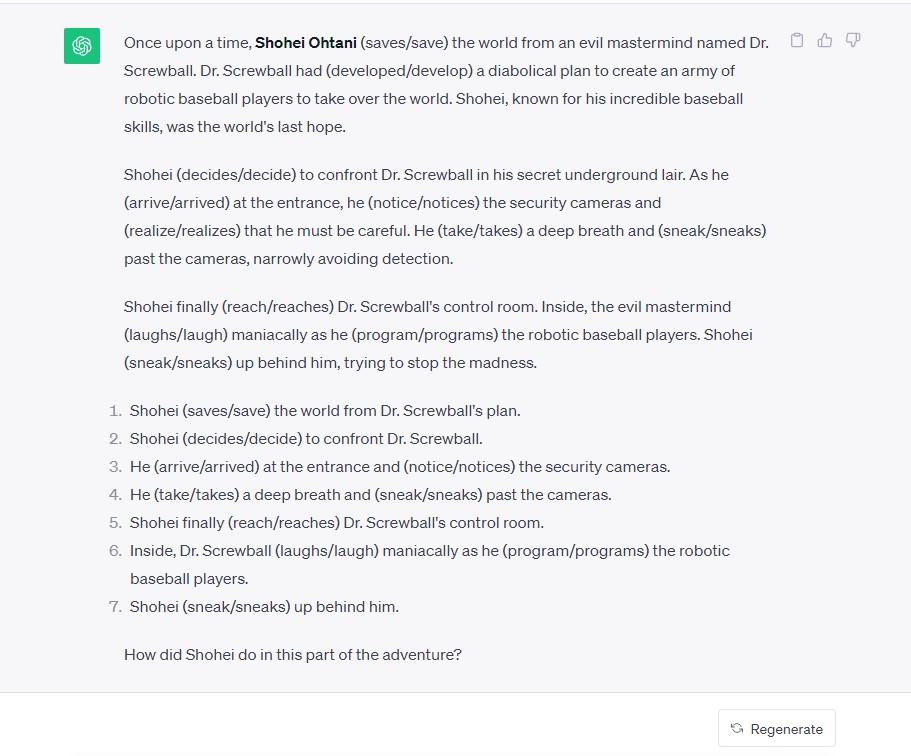

Here we can see that Ana’s result ended up testing subject-verb agreement in the simple present, along with a smattering of present vs. past items. This is not what the original prompt was asking for, and I never got results like this in my own testing. On closer inspection, I found there are probably a few places where I could have been clearer.

For example, even though I mentioned that the prompt was testing the past tense, I could have added reinforcers like “You are going to write a story in the past tense…” and so on.

From Tomiko:

I input your advanced prompt from LinkedIn about Ohtani into Bard and ChatGPT, but they both returned things that didn’t work, or allow for interaction. Are they supposed to be interactive chats, or is the user supposed to take this and turn it into something interactive?

Bard gave me a very eloquent story and then this:

Part 1

Question 1: What did Shohei see when he was walking down the street?

Question 2: What did Dr. Screwball say he was going to do?

Question 3: What did Shohei agree to do?

Answers:

Question 1: saw

Question 2: said

Question 3: agreed

Result: Shohei succeeded in this part of the adventure.

Continue to the next part? Yes

And ChatGPT gave me 7 parts like this:

Part 1: Shohei Ohtani was a pitch for the Los Angeles Angels. He throw a devastating fastball, hit home runs, and steal bases, making him a unique talent in baseball. One day, while he was practice in the batting cages, he received a mysterious message. It tell him that Dr. Screwball was threatening to unleash chaos upon the world. Shohei knew he had to stop him.

Did Shohei succeed or fail in this part of the adventure?

Part 2: Shohei pack his bags and leave for Dr. Screwball’s secret lair, which was located on a remote island. When he arrive, he see the island was heavily guarded by Dr. Screwball’s henchmen. Shohei knew he needed a plan to get past them.

Did Shohei succeed or fail in this part of the adventure?

And then at the end:

That’s the end of the story for now. Please let me know how you did, and I’ll provide feedback on your strengths and weaknesses.

Tomiko’s results were pretty bad! Neither chatbot followed the prompt, and some of the output didn’t even focus on verbs.

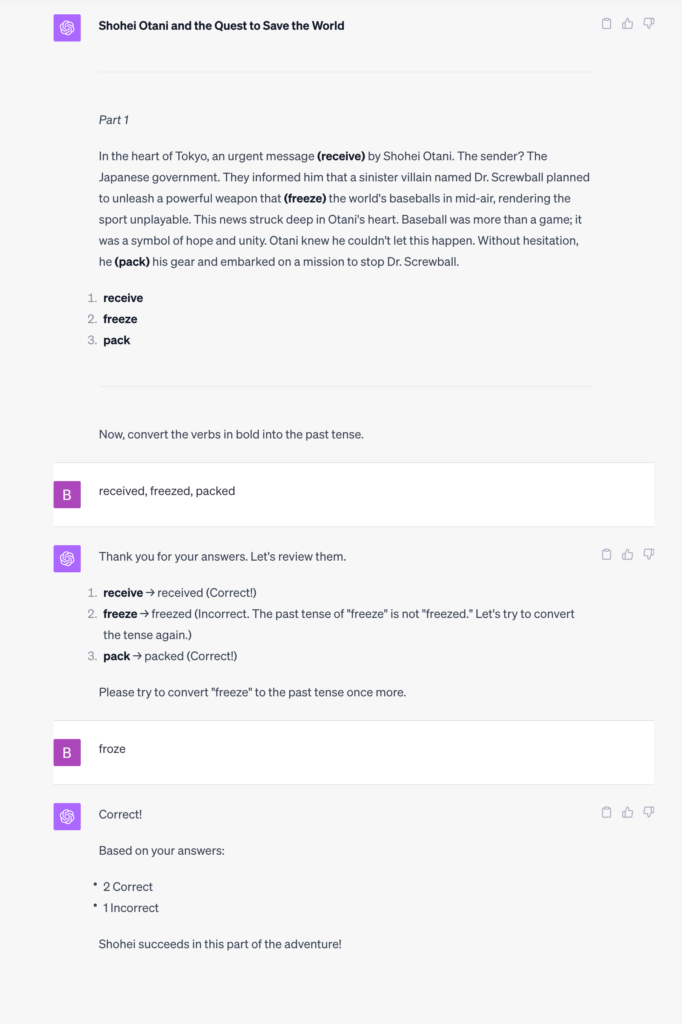

For context, here are some of my results. The first one had some errors, which is important to recognize. Later, they all got very close to what I was looking for originally:

My Result #1

If there’s one thing that I see as an issue with people using chatbots, it’s that they don’t recognize how flexible these tools are. If you want a chatbot to change direction or do something differently, it will!

You can (and should) tell it that it’s wrong, and how to fix it. In my experience, it gets better and better at fixing the issues, even outside of the given chat.

If you’re planning to give prompts to your students like the Ohtani one I provided above, please consider the whole thing as an opportunity for language learning, and not just the output.

I wrote a blog post about this for TESOL recently: Building Prompts & Building Language. Please consider taking a look as I haven’t seen many people step back and do a meta approach like this, but really it should guide a lot of our work with AI in the language learning class.

In a case like the one above, it would be a great opportunity to ask students what they’re seeing, and how they would explain and fix the problem.

Moving forward, as I kept working with the prompt, I was getting more and more consistent results:

My Result #2

The chat kept going, but you get the idea. Here it was giving me feedback as I went, which is what I wanted, but it didn’t give me the opportunity to try to catch my mistakes.

My Result #3

After some fiddling with the prompt, I was able to get it to let me try again on the questions I missed.

The Takeaway

This is important: DON’T ACCEPT THE FIRST RESULTS FROM A CHATBOT.

If you’re developing a prompt or just grab one from me or anyone else and it doesn’t work right away, start talking to the bot. Ask it what it understands or what it doesn’t understand about what you’re looking for.

Just like you do with your students, guide it in the right direction. It will catch on and get closer and closer to what you’re looking for over time.

Pro tip: after you’ve worked with it for a while, ask your chatbot to provide you with a new prompt that reflects the original goal but takes into consideration the iterative changes you’ve gone through. This way you don’t have to start from scratch the next time around, and even though it might not be perfect, it will be a lot closer to what you’re looking for.

Working with AI is not (usually) a one-and-done process. Sometimes things work, sometimes they don’t, but you can use prompts as an excellent starting point for getting where you want to go.

Keep going!
